Curious about building data warehouses but don't know the best practices for designing one for your organization or company? Then this article is for you.

This article walks through the best practices that data engineers and data scientists follow when building a data warehouse.

Data warehouses are data systems designed for data management and query optimization in large data repositories. Typically, businesses run transactional databases that record daily activities, alongside auxiliary data sources for critical operations. A data warehouse collates these sources in one place for centralized processing, analytical querying, reporting, and rapid decision making. Data engineers use extract, transform, and load (ETL) processes to collect the data and store it in the warehouse.

The need to move analytics off traditional transactional databases, together with the emergence of OLAP processing techniques that use columnar storage, led to the development of the cloud data warehouse. Examples of cloud data warehouses are Amazon Redshift, Microsoft Azure SQL Data Warehouse (now part of Azure Synapse Analytics), Google BigQuery, and Snowflake.

Logging

Logging is a best practice that data engineers and data warehouse managers often overlook, yet it plays an important role in data warehousing. Sending logs to a centralized repository where they can be visualized and analyzed speeds up debugging and helps you build a robust ETL process.
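
As a minimal sketch of what this can look like, the Python snippet below wraps each ETL step so that its start, row count, duration, and any failure are written as one structured line. The names `build_etl_logger` and `run_step` are hypothetical, and the in-memory stream only stands in for whatever centralized log store you actually use.

```python
import io
import logging
import time


def build_etl_logger(stream):
    """Return a logger that writes one structured line per event to `stream`."""
    logger = logging.getLogger("etl_demo")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()      # avoid duplicate handlers on repeated calls
    logger.propagate = False     # keep the demo output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


def run_step(logger, step_name, func):
    """Run one ETL step, logging its start, row count, duration, and failures."""
    logger.info("step=%s status=started", step_name)
    started = time.perf_counter()
    try:
        rows = func()
    except Exception:
        # logger.exception records the traceback at ERROR level
        logger.exception("step=%s status=failed", step_name)
        raise
    elapsed = time.perf_counter() - started
    logger.info(
        "step=%s status=finished rows=%d seconds=%.3f",
        step_name, len(rows), elapsed,
    )
    return rows


# Assumption: a StringIO buffer stands in for a centralized log repository.
log_stream = io.StringIO()
etl_logger = build_etl_logger(log_stream)
extracted = run_step(
    etl_logger, "extract_orders", lambda: [{"order_id": 1}, {"order_id": 2}]
)
log_text = log_stream.getvalue()
```

Because every line carries the same `step=... status=...` fields, a log aggregation tool can filter on a failed step or chart step durations without custom parsing.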

Designing the Data Warehouse Before Implementation

Data engineers and data warehousing managers usually have a specific business use case in mind when building a data warehouse. It is therefore a good idea to map out how the data should flow before implementation starts, typically with a flow diagram. Doing so reduces errors and speeds up the implementation of the design.

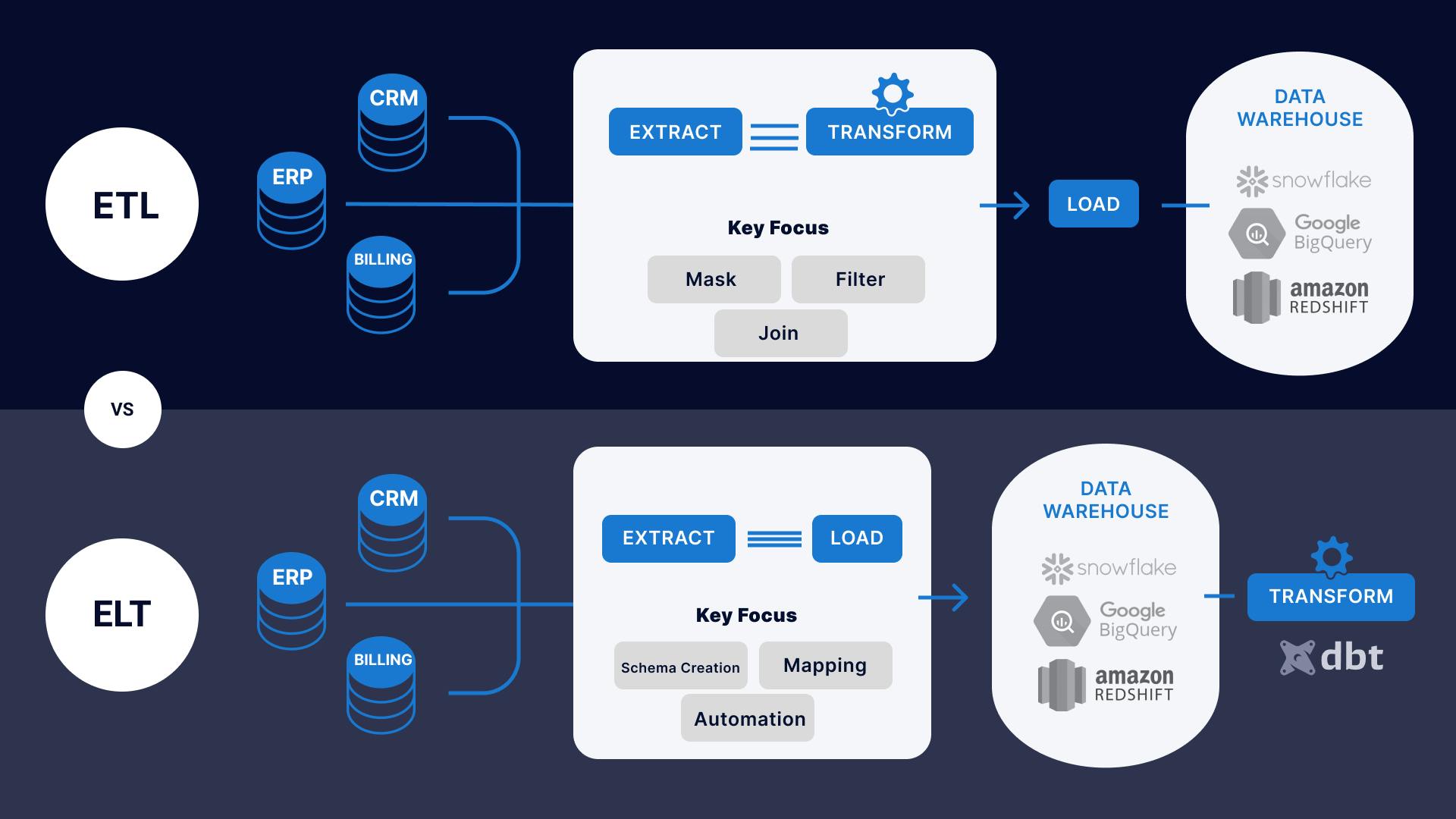
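
A flow diagram can also be written down as data, which makes it easy to check and to derive a run order from. The sketch below is only an illustration: the step names are hypothetical and are not taken from the diagram above. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Each key is a step in the flow; its value is the set of steps
# that must finish before it can start. All names are hypothetical.
data_flow = {
    "extract_orders": set(),
    "extract_customers": set(),
    "stage_raw_data": {"extract_orders", "extract_customers"},
    "build_dim_customer": {"stage_raw_data"},
    "build_fact_sales": {"stage_raw_data", "build_dim_customer"},
    "refresh_reports": {"build_fact_sales"},
}

# static_order() yields every step after all of its prerequisites
# and raises graphlib.CycleError if the flow contains a loop.
run_order = list(TopologicalSorter(data_flow).static_order())

for position, step in enumerate(run_order, start=1):
    print(position, step)
```

Keeping the flow in this form means a circular dependency is caught as soon as the graph is sorted rather than during a production run.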

ETL & ELT

When moving data out of a source database, such as an OLTP system, data engineers use a variety of migration and integration tools. These tools follow one of two patterns: ETL (extract, transform, load), where data is transformed before it reaches the warehouse, or ELT (extract, load, transform), where raw data is loaded first and transformed inside the warehouse. The choice depends largely on whether you are working in the cloud or on-premises. ELT is generally recommended for cloud data warehouses, while ETL is more common on-premises, though it still works in the cloud.

Choose your tooling, therefore, based on the type of data warehouse you plan to implement.
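
To make the ELT pattern concrete, here is a minimal sketch in Python. It uses the standard-library `sqlite3` module as a stand-in for a cloud warehouse, and the table names `raw_orders` and `orders_clean` and the sample rows are invented for illustration. The raw values are loaded untouched, and the cleanup happens afterwards in SQL inside the database.

```python
import sqlite3

# Extract: rows as they might arrive from a source system (made-up data).
raw_rows = [
    ("1001", "2024-01-05", " 19.99 ", "shipped"),
    ("1002", "2024-01-05", "5.00", "CANCELLED"),
    ("1003", "2024-01-06", "42.50", "Shipped"),
]

elt_conn = sqlite3.connect(":memory:")

# Load: store the data as-is, every column as text, with no cleanup yet.
elt_conn.execute(
    "CREATE TABLE raw_orders "
    "(order_id TEXT, order_date TEXT, amount TEXT, status TEXT)"
)
elt_conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", raw_rows)

# Transform: cast types, normalize status, and drop cancelled orders in SQL.
elt_conn.execute("""
    CREATE TABLE orders_clean AS
    SELECT CAST(order_id AS INTEGER)   AS order_id,
           order_date,
           CAST(TRIM(amount) AS REAL)  AS amount,
           LOWER(status)               AS status
    FROM raw_orders
    WHERE LOWER(status) <> 'cancelled'
""")

clean_rows = elt_conn.execute(
    "SELECT order_id, amount, status FROM orders_clean ORDER BY order_id"
).fetchall()
```

In an ETL pipeline the same casting and filtering would run in the pipeline code before the insert, so the warehouse would never see the raw table.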

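Architecting the Data Warehouse

Data engineers design a data warehouse for business intelligence and online analytical processing (OLAP) in accordance with standardized guidelines. It is therefore necessary to build your architecture on the right schema, typically the star schema or the snowflake schema. A star schema places a central fact table among denormalized dimension tables, while a snowflake schema normalizes those dimensions into further tables.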
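
The following sketch shows a small star schema, again using Python's `sqlite3` module as a stand-in for a real warehouse. The tables `fact_sales`, `dim_customer`, and `dim_date` and their contents are hypothetical examples, not a prescribed design.

```python
import sqlite3

star_conn = sqlite3.connect(":memory:")
star_conn.executescript("""
    -- Dimension tables hold descriptive attributes.
    CREATE TABLE dim_customer (
        customer_key INTEGER PRIMARY KEY, name TEXT, region TEXT
    );
    CREATE TABLE dim_date (
        date_key INTEGER PRIMARY KEY, full_date TEXT, year INTEGER
    );
    -- The fact table holds measures plus a key into each dimension.
    CREATE TABLE fact_sales (
        sale_id      INTEGER PRIMARY KEY,
        customer_key INTEGER REFERENCES dim_customer(customer_key),
        date_key     INTEGER REFERENCES dim_date(date_key),
        amount       REAL
    );
    INSERT INTO dim_customer VALUES (1, 'Acme', 'EU'), (2, 'Globex', 'US');
    INSERT INTO dim_date VALUES
        (20240105, '2024-01-05', 2024),
        (20240106, '2024-01-06', 2024);
    INSERT INTO fact_sales VALUES
        (1, 1, 20240105, 100.0),
        (2, 2, 20240105, 50.0),
        (3, 1, 20240106, 25.0);
""")

# A typical analytical query: join the fact table to its dimensions
# and aggregate a measure by dimension attributes.
sales_by_region = star_conn.execute("""
    SELECT c.region, d.year, SUM(f.amount) AS total
    FROM fact_sales AS f
    JOIN dim_customer AS c ON c.customer_key = f.customer_key
    JOIN dim_date     AS d ON d.date_key     = f.date_key
    GROUP BY c.region, d.year
    ORDER BY c.region
""").fetchall()
# sales_by_region == [('EU', 2024, 125.0), ('US', 2024, 50.0)]
```

A snowflake variant of the same model would move `region` out of `dim_customer` into its own table and reference it by key, trading an extra join for less repeated data.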

Loosely Coupled

Cloud data warehouses use loosely coupled architectures in which storage and compute are decoupled. Cloud data engineers consider the possibility of business expansion during the design process, and a decoupled storage-compute architecture accommodates that growth and supports reconfiguration as the business changes. Users can introduce clusters that work on subsets of the data, and they can add or remove resources as needed.

Scalability

Creating a scalable cloud data warehouse is another best practice. An organization's data is usually expected to grow substantially over time, so scalability is a critical consideration. A warehouse that scales efficiently lets you keep storing that data and add resources so that queries stay fast as volumes increase.

Companies that are expanding look for cloud data warehouses that can scale and integrate easily with the other services on cloud platforms such as AWS, Azure, and Google Cloud Platform, and that bring value to the business. Amazon Redshift, a cloud data warehouse on AWS, is popular with engineers because it lets them size the warehouse for their core workload while scaling easily for spikes in users and data, balancing performance and cost.

Cost Optimization

Cost optimization is another best practice when using a data warehouse. Many companies previously ran on-premises data warehouses, which can be costly. Cloud data warehouses make it much easier to pay only for what you use, because they follow a pay-as-you-go model. It is therefore worth considering a migration to the cloud, with cloud architects on board to ensure a smooth transition.

Performance

Performance is a key concern in data warehousing. Use query optimization techniques to write efficient, fast SQL queries. This requires data engineers to have a solid understanding of SQL.
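
One basic optimization habit is to inspect the query plan before and after a change. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module. SQLite is only a stand-in here: cloud warehouses expose their own plan tools and often rely on mechanisms other than indexes, such as sort keys, partitioning, or clustering. The `sales` table, the `query_plan` helper, and the index name are hypothetical.

```python
import sqlite3

perf_conn = sqlite3.connect(":memory:")
perf_conn.execute(
    "CREATE TABLE sales "
    "(sale_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
perf_conn.executemany(
    "INSERT INTO sales (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)


def query_plan(connection, sql, params=()):
    """Return SQLite's plan for `sql` as a single readable string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


lookup = "SELECT SUM(amount) FROM sales WHERE customer_id = ?"

# Without an index, the filter has to scan the whole table.
plan_before = query_plan(perf_conn, lookup, (42,))

# With an index on the filter column, SQLite can seek to matching rows.
perf_conn.execute("CREATE INDEX idx_sales_customer ON sales (customer_id)")
plan_after = query_plan(perf_conn, lookup, (42,))

total = perf_conn.execute(lookup, (42,)).fetchone()[0]
print(plan_before)
print(plan_after)
```

The exact plan wording differs between SQLite versions, but the second plan should name `idx_sales_customer` while the first should not, and the query result is the same either way.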

Agility

An agile approach to data warehousing helps organizations move from reporting and historical analysis to more advanced, predictive analytics. It also supports incorporating new data types and structures into complex behavioral models, yielding insights from the data warehouse that were previously out of reach.

Conclusion

In this article, we have gone through the best practices of data warehousing, which will help data engineers and data warehouse managers design the best data warehouse for their business use case.

To make informed decisions, an organization needs the right data. A data warehouse provides that data to data scientists, data analysts, and business intelligence professionals.

Thanks for reading. Let's keep learning!